Hi, I'm Jiateng Liu.

I'm Jiateng Liu (刘嘉腾), a first-year Ph.D. student at the University of Illinois Urbana-Champaign (UIUC) under the guidance of Prof. Heng Ji. Before that, I spent two years in the same group earning my Master of Science degree. I earned my bachelor's degree in Computer Science from Zhejiang University.

I'm broadly interested in the science of NLP while keeping an eye on its applications. Currently, my research centers on developing adaptable representations of world knowledge to support human-like intelligence and reasoning. I focus on building agentic systems that continually learn from real-world signals, and on advancing fine-grained multimodal alignment and interaction. A key aspect of my work is knowledge management: ensuring that models remain consistent, current, and trustworthy for rigorous real-world applications where safety is paramount.

Science: I am deeply committed to the rigorous study of representation learning and the science of large models, and I strive to improve their interpretability and effectiveness. As we move into the next decade, I am excited to contribute to the evolution of AI by developing systems that not only perform complex tasks but are also interpretable and genuinely beneficial to humanity.

Applications: I believe that AI must move beyond theoretical prowess to deliver substantial improvements in daily life. I follow the applications of NLP closely and look forward to the next "ChatGPT moment" in everyday life.

Research

My research interests are organized into three key areas:

The Physics and Interpretability of Language Models

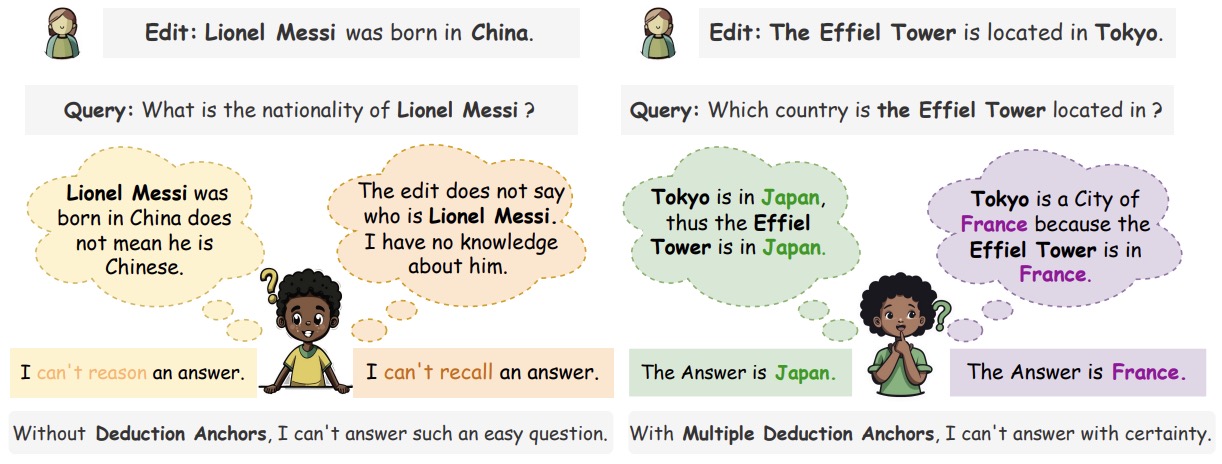

I am intrigued by the underlying "physics" of Large Language Models (LLMs), focusing on how they absorb and represent knowledge, process information, and make predictions. A major part of my research is improving the efficiency and accuracy of updating pretrained LLMs, so that their knowledge remains robust, consistent, and up to date while minimizing cost and time.

Multi-Modal Representation Learning and Multi-Media Foundational Models

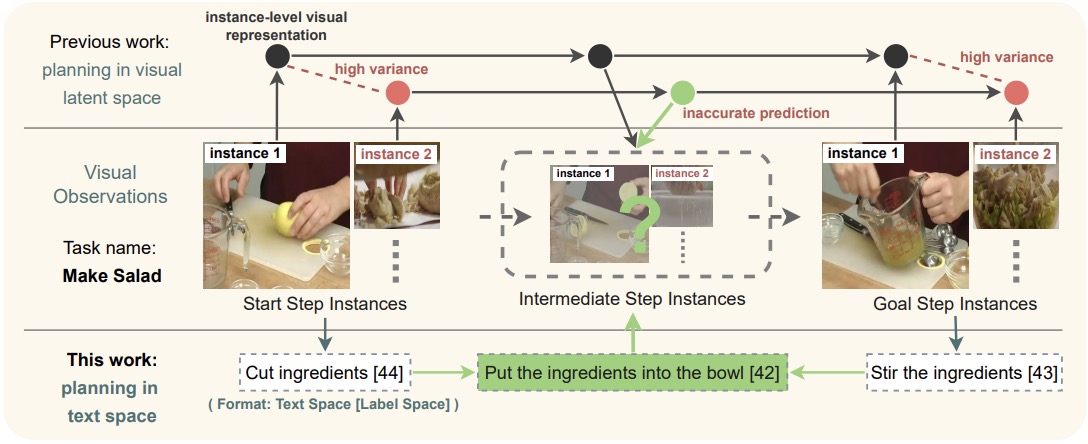

My work in this area centers on designing new paradigms for multi-modal interactions and deriving empirical scaling laws for multi-modal foundational models. I focus particularly on video understanding and generation, aiming to seamlessly integrate language, visual, and temporal modalities.

A key aspect of my research is exploring how multimodal interactions are learned, including the mechanisms by which information flows and aligns across modalities to form cohesive representations. I also investigate protocols for efficient and complete multimodal interaction, so that each modality contributes optimally to the task at hand while redundancy is minimized and interpretability is maximized.

My goal is to develop systems capable of effectively analyzing and generating content across diverse modalities, with applications in tasks such as video captioning, video-based reasoning, and video synthesis, thereby advancing the capabilities of multi-modal AI.

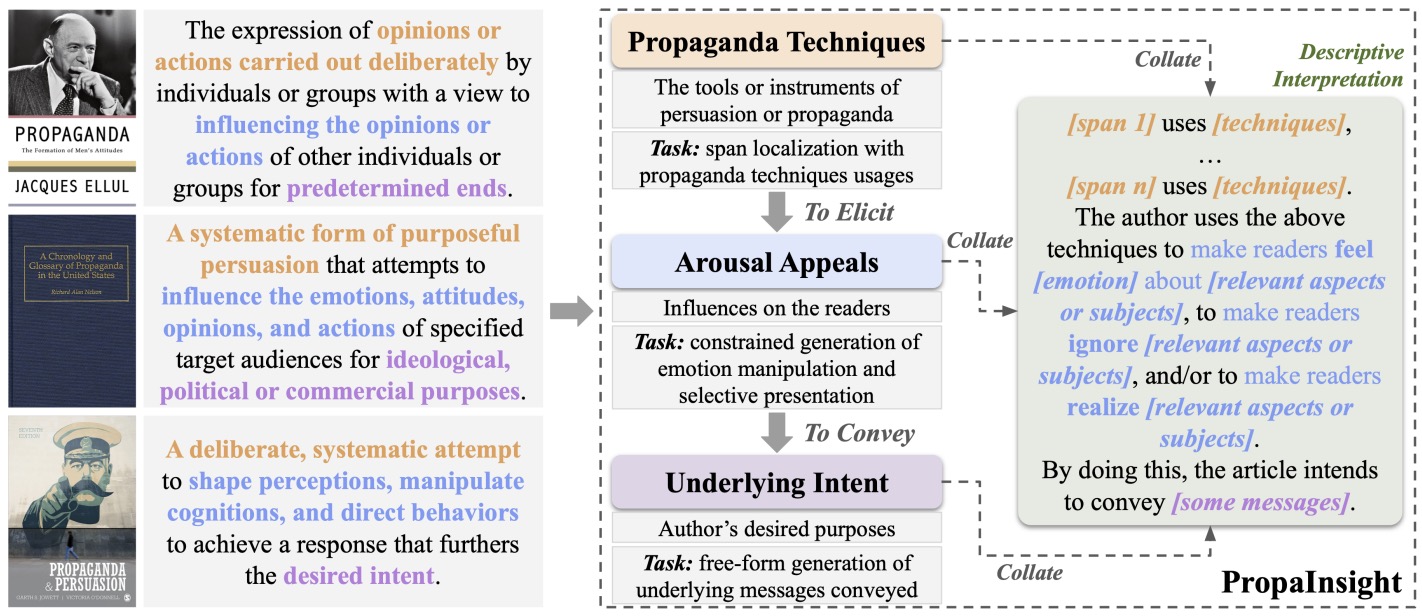

NLP and Multi-Disciplinary Science for Social Good

I use NLP to tackle challenges in social and scientific domains. For example, I analyze social media data to study the spread of misinformation and its societal impacts, helping to develop tools that counteract disinformation.

Additionally, I explore how NLP can contribute to scientific advancements, such as facilitating drug design and interpreting neural signals. These interdisciplinary applications demonstrate the transformative potential of NLP in both improving lives and advancing science.

Publications

EVEDIT: Event-based Knowledge Editing for Deterministic Knowledge Propagation

A Language First Approach for Procedure Planning

PropaInsight: Toward Deeper Understanding of Propaganda in Terms of Techniques, Appeals, and Intent

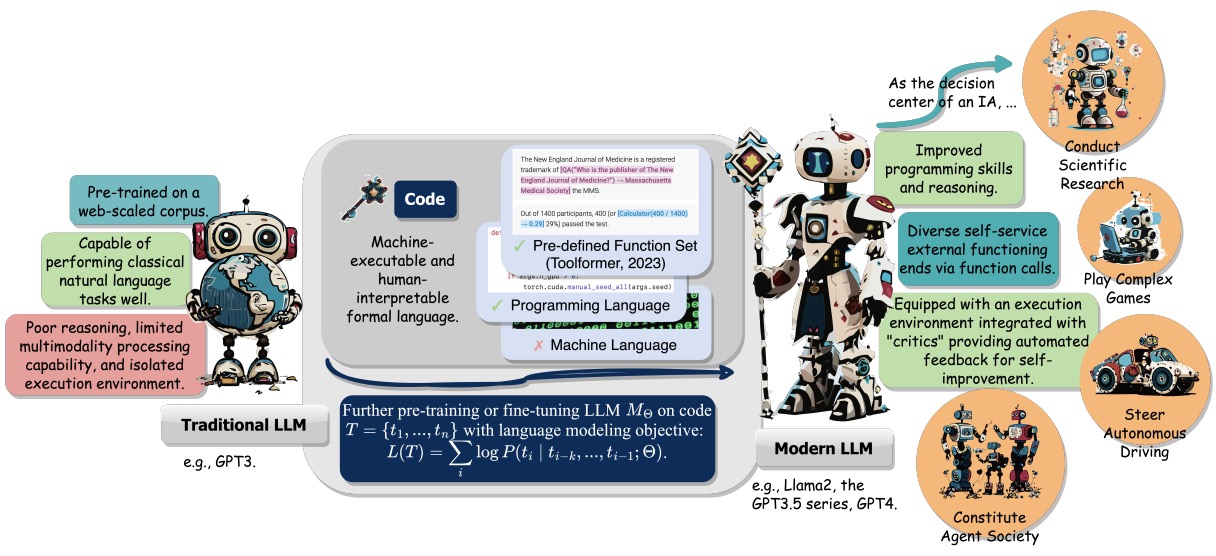

If LLM Is the Wizard, Then Code Is the Wand: A Survey on How Code Empowers Large Language Models to Serve as Intelligent Agents

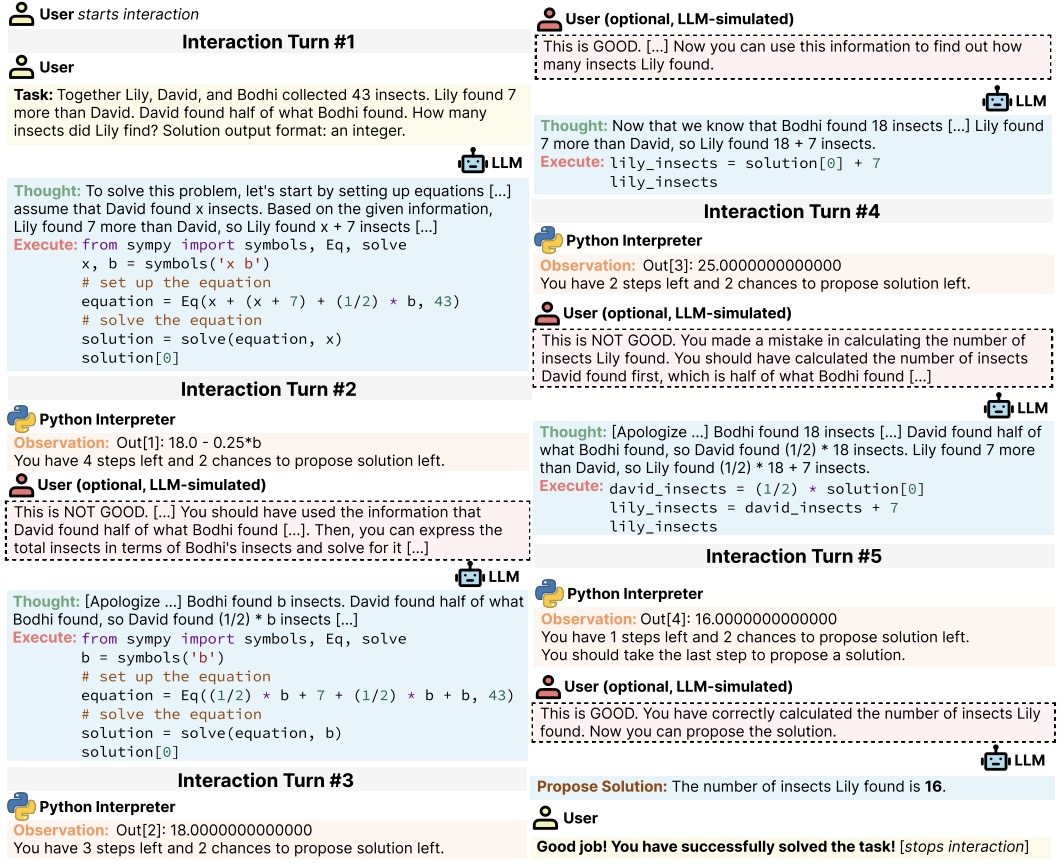

MINT: Evaluating LLMs in Multi-turn Interaction with Tools and Language Feedback

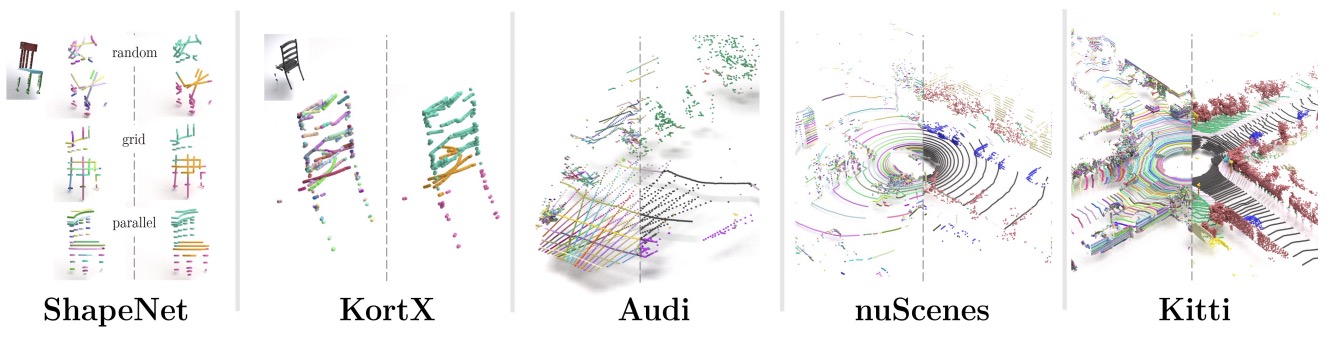

CurveCloudNet: Processing Point Clouds with 1D Structure

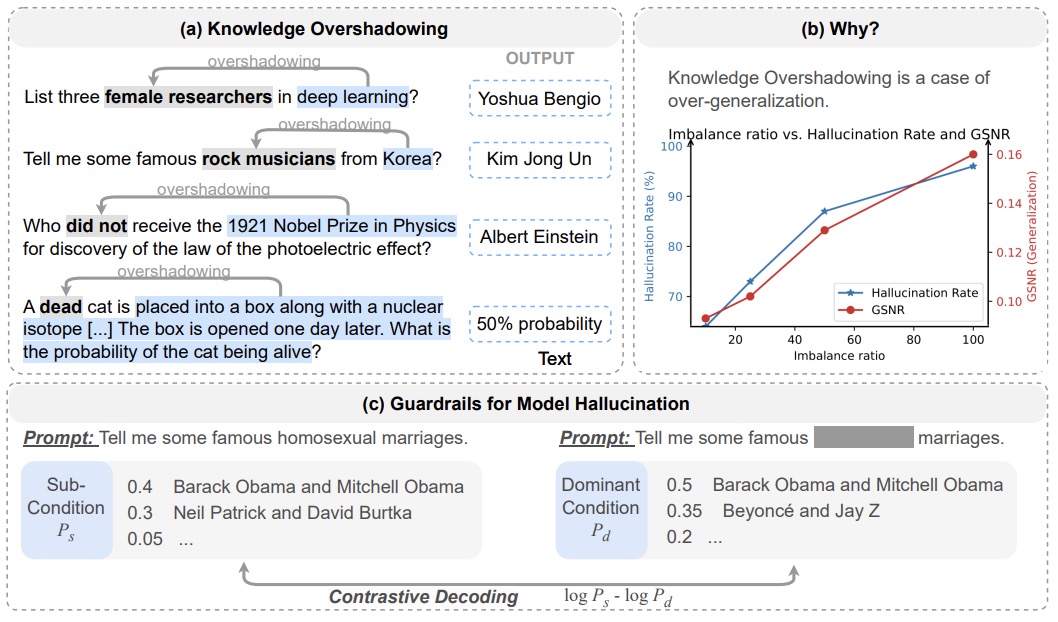

Knowledge Overshadowing Causes Amalgamated Hallucination in Large Language Models

Research Experiences

- 3D reconstruction with curve data

- Language-side approaches for procedure planning

- Making Transformers efficient

- 3D human mesh reconstruction

Work Experience

- Applied Scientist Intern on the Amazon Alexa AI team

Assistantship

- Teaching assistant for CS440 at UIUC

- Research assistant to Prof. Heng Ji